Most people do not recognize how much of our daily lives is run by algorithms. Sure, obvious things like Google Maps directions make sense, but did you know that most of your cultural and social experience is now driven by algorithms?

The specific algorithm I'm speaking of is the recommendation engine. The goal of this algorithm is to provide a person with a dataset customized to that person's taste. Let's look at an example.

Have you ever used Pandora or Spotify or any other internet music provider? These are the clearest examples of recommendation engines.

When you use Pandora, you're asked to create a new channel by searching for a song or artist. You then have "random" songs play after that, and you're allowed to Thumbs Up or Thumbs Down a given song. The more preferences you provide, the better it is at providing songs you enjoy.

But how does that work? Out of millions of songs, how does Pandora know that if you like Queen you might also like AC/DC?

The Basic Algorithm

For those not familiar with computer science, the term algorithm simply refers to a strategy to solve a problem. The basic tenet of computer science is that any problem can be quantified into some kind of logic-and-math strategy. From displaying the pretty graphics in video games to transmitting Tweets to your followers, it's all just math and strategy.

For Pandora, it works something like this:

You create a new station by naming an artist or song. We'll go with Queen because that's my go-to Pandora station. The system uses that as a starting point.

For simplicity, let's say that all music can be defined by two properties: how rocky it is and how melodic it is. In the case of Pandora, I'm sure they have tons of properties, but we'll simplify it to these two.

We can then score every artist on these two properties on a scale from -10 to 10. Let's give Queen a rock level of 7 and a melodic level of 5. This can be graphed!

You can also graph a ton of other artists:

Two notes: I didn't think too hard about the classification and my musical knowledge ends around 2001.

You can probably see where this is going. Based purely on the measurement of rock and melody, if you like Queen, you're likely to enjoy Red Hot Chili Peppers, Bon Jovi, and Green Day, and you're probably not going to like Snoop Dogg or Celine Dion.
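Here's a rough sketch of that idea in Python. Only Queen's scores (rock 7, melodic 5) come from above; every other number, plus the names `ARTISTS`, `distance`, and `recommend`, is made up for illustration. Pandora's real system is surely more involved. The sketch just picks the artists whose dots sit closest to the seed artist's dot.

```python
import math

# Hypothetical (rock, melodic) scores on the -10..10 scale.
# Only Queen's (7, 5) comes from the text; the rest are invented for illustration.
ARTISTS = {
    "Queen": (7, 5),
    "Green Day": (8, 3),
    "Bon Jovi": (6, 6),
    "Red Hot Chili Peppers": (5, 3),
    "Snoop Dogg": (-6, -2),
    "Celine Dion": (-7, 8),
}


def distance(a, b):
    """Straight-line distance between two dots on the graph."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def recommend(seed, artists=ARTISTS, count=3):
    """Return the `count` artists closest to the seed artist, nearest first."""
    origin = artists[seed]
    others = [name for name in artists if name != seed]
    return sorted(others, key=lambda name: distance(origin, artists[name]))[:count]


print(recommend("Queen"))
# → ['Bon Jovi', 'Green Day', 'Red Hot Chili Peppers']
```

With these invented scores, Snoop Dogg and Celine Dion sit far from Queen, so they never make the short list.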

Recommendation Drift

An interesting thing can happen, though, when you introduce the idea of Thumbs Up and Thumbs Down: your recommendations can drift.

In a recommendation engine, each person has a thumbprint. It's basically your current location on that graph above. Because you seeded the station with Queen, you start at the same location as Queen.

Because it can't play Queen songs all day, the algorithm selects a song by a nearby artist. Let's say it selects Basket Case by Green Day. It's a cool song, so you give it a Thumbs Up. That pushes your location toward Green Day.

The algorithm then selects another song. Perhaps it reaches a bit wider out and selects You Shook Me All Night Long by AC/DC. It's a fine song, but you're not in the mood for it, so you give it a Thumbs Down. That pushes you away from AC/DC.

Finally, you're now in range of Sublime and the algorithm selects Santeria. You don't give it a Thumbs Up or a Thumbs Down, but you listen to the whole song. That's considered a soft like, so it nudges you ever so slightly toward Sublime.

This pattern continues, testing different artists until it finds your stable spot. Eventually, you'll settle in that nice sweet spot somewhere between What I Got and Don't Stop Me Now. Pandora now knows your musical taste, and it continues to feed you those songs forever.

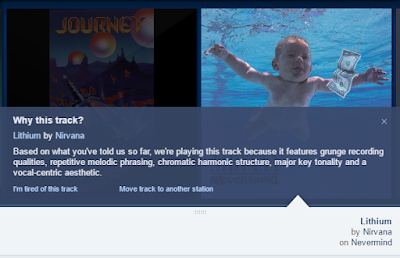
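The drift described above can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the step sizes in `FEEDBACK_WEIGHT`, the function name `nudge`, and every coordinate except Queen's (7, 5) are hypothetical. A positive weight moves your thumbprint toward the artist and a negative weight moves it away.

```python
# Hypothetical step sizes: how far each kind of feedback moves the listener.
FEEDBACK_WEIGHT = {
    "thumbs_up": 0.5,      # move halfway toward the artist
    "thumbs_down": -0.25,  # back away from the artist
    "listened": 0.1,       # "soft like": a small nudge toward the artist
}


def nudge(thumbprint, artist, feedback):
    """Move the thumbprint along the line between it and the artist."""
    weight = FEEDBACK_WEIGHT[feedback]
    return tuple(t + weight * (a - t) for t, a in zip(thumbprint, artist))


# Only Queen's position comes from the text; the others are invented.
queen, green_day, acdc, sublime = (7, 5), (8, 3), (9, 1), (3, 4)

thumbprint = queen                                        # seeded with Queen
thumbprint = nudge(thumbprint, green_day, "thumbs_up")    # liked Green Day
thumbprint = nudge(thumbprint, acdc, "thumbs_down")       # disliked AC/DC
thumbprint = nudge(thumbprint, sublime, "listened")       # sat through Sublime
print(thumbprint)
```

With these made-up numbers, the thumbprint ends up near (6.7, 4.7): still close to Queen, but pulled a little toward Sublime and away from AC/DC.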

We measured only two properties here for ease of graphing, but this goes out to dozens of properties. This is all exposed to you as a user. On Pandora's website, you can choose "Why Was This Track Selected", and you'll see something like this:

These are the next few songs that played:

Apparently my thumbprint is solidly near Major Key Tonality and Melodic Songwriting. That sounds about right.

All this is powered by that little Thumbs Up button.

Social, Cultural, and News Recommendations

What does all this have to do with the 2016 Election? This same algorithm is running in your entire digital life.

Have you ever noticed that you see posts by the same friends frequently, but you don't always see posts from other people? Facebook's recommendation engine is determining which posts you see.

Recognize this guy?

Yup, it's a Thumbs Up. This same button is determining your social and cultural tastes in the exact same way Pandora determines your musical tastes.

Facebook then uses that data to determine which posts to deliver to you. What if we took Facebook posts and graphed them like we did above, but on the scale of Liberal/Conservative and Dramatic/Boring? It'd look something like this:

Note: I didn't think a lot about the location of these dots. I'm not editorializing.

Where do you think your thumbprint dot lands? Facebook will deliver stories to you that it believes you'd prefer to read.

And it's not based entirely on the Like button. Any time you interact with a post -- comment on it, share it, heck, probably even stop to read it -- that registers as an interaction. Each interaction modifies your thumbprint so that Facebook can deliver more finely tuned posts.
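One way to picture this is as a weighted average: every post you touch pulls your thumbprint toward it, and stronger interactions pull harder. The sketch below is my own guess at the shape of such a calculation, not Facebook's actual method. The weights in `INTERACTION_WEIGHT`, the function name `thumbprint_from`, and the sample positions (political lean, drama) are all hypothetical.

```python
# Hypothetical weights: stronger interactions count for more.
INTERACTION_WEIGHT = {"read": 1, "like": 2, "comment": 3, "share": 4}


def thumbprint_from(interactions):
    """Weighted average position of every post the user interacted with.

    Each interaction is (kind, (x, y)), where (x, y) is the post's position
    on two made-up axes such as political lean and drama.
    """
    if not interactions:
        raise ValueError("need at least one interaction")
    total = sum(INTERACTION_WEIGHT[kind] for kind, _ in interactions)
    x = sum(INTERACTION_WEIGHT[kind] * pos[0] for kind, pos in interactions) / total
    y = sum(INTERACTION_WEIGHT[kind] * pos[1] for kind, pos in interactions) / total
    return (x, y)


history = [
    ("read", (-2, 1)),   # paused on a calm post
    ("like", (4, 6)),    # liked a more dramatic one
    ("share", (6, 8)),   # shared the most dramatic one
]
print(thumbprint_from(history))
```

Because the share counts four times as much as the read in this sketch, the resulting thumbprint lands much closer to the shared post than to the one you merely paused on.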

This isn't just Facebook -- this is everything. Google News, for example, uses a recommendation engine to give you news. Here's what mine looks like:

Google knows me really well.

This is true for the posts you see on Reddit, the items you see for sale on Amazon, and the ads you see on YouTube.

In essence, everything you see and experience is customized for you. Put another way, nobody else sees or experiences the same thing you do on the internet. Your social and cultural reality is distorted in such a way that it reinforces your personal tastes.

You Won't Believe What I'm About to Say

In general, this is fairly innocuous if not outright desired. What's wrong with hearing your favorite music or getting D&D news delivered to you?

The trouble is that most of these free services we enjoy online have to make their money somehow. In the case of news sites, that money comes purely from advertising, and that advertising is paid by the impression. The more people who visit the site, the more money they make. That is not a bad business model in itself, but the profit margin is razor thin and the competition is fierce.

This pressure has led these companies down a path of rapid evolution. Using a combination of user analytics (i.e. tracking what you do) and psychology, news sites have developed the ultimate weapon: clickbait.

Clickbait is a headline that sounds so outrageous or interesting that it breaks your brain and you have to click on it. Here are some examples:

They're almost always misleading and usually have missing information combined with some incredible statement like "You'll never believe #3!". What is number three!? I have to know!

I won't get into the why or the history of clickbait, but suffice it to say that it works. This wasn't some accident, either. These headlines have been intentionally optimized by the aforementioned user analytics and psychological research to get you to click.

Unfortunately, outrageous headlines aren't reserved for celebrity gossip and top ten lists. Legitimate news topics are subject to this as well.

Look at this post from the Gawker network:

You want to click that, don't you? It's an outrageous premise and evokes an emotion, plus the picture strikes a chord. I'll admit that when this showed on my feed, I totally fell victim and clicked it. It was a trite article, as they always are, but it still left an impression on me.

However, the fact is that I clicked it. That gave Gawker their $0.001 and left a mark on my digital thumbprint that I like articles like that. This incentivizes Gawker to make more of these articles and causes the recommendation engines in our lives to deliver more of these articles and fewer traditional news articles. Websites are optimizing toward what we're willing to click on, and recommendation engines are blindly believing that's our preference.

This is the problem. There is a feedback effect here that has been running rampant for the last decade and has caused our political discourse to fall apart. You're literally being delivered only the content that the recommendation engine believes you want to see, and you're giving it Thumbs Up actions based on headlines purposefully engineered to get you to click them.

Remember when I mentioned the recommendation drift earlier? When you combine recommendation engines with clickbait headlines that lead to outrageous content, the content you see becomes more outrageous. The articles you see will literally drift more partisan and more outrageous over time. You clicked it, so the algorithm believes you like it!
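To make that feedback loop concrete, here's a toy simulation. It's a deliberately crude sketch: the single "outrage" score, the function name `simulate_drift`, its parameters, and the assumption that the more outrageous headline always wins the click are all mine. The point is only that if every click counts as a like, the reader's position can move in just one direction.

```python
def simulate_drift(start=0.0, rounds=10, reach=1.0, step=0.5):
    """Track a reader's 'outrage' score over repeated recommendations.

    Each round the engine offers two articles near the reader's current
    position: one slightly tamer, one slightly more outrageous. The reader
    clicks one, and the click pulls the position toward that article.
    """
    position = start
    history = [position]
    for _ in range(rounds):
        candidates = [position - reach, position + reach]
        # Assumption: the most outrageous headline always wins the click.
        clicked = max(candidates)
        # The engine treats the click as a like and moves the reader toward it.
        position += step * (clicked - position)
        history.append(position)
    return history


print(simulate_drift(rounds=5))
# → [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
```

Nobody in this loop ever asks for more outrageous content. The score climbs anyway, because the only signal the engine gets is the click.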

In other words, if you wanted Hillary to win, the news you saw was customized based on your preference for Hillary to win, and you were mostly fed the most outrageous form of that content. Same if your preference was Trump.

Think Critically

So how do we fight this? It's simple: think critically.

Stop giving in to your own indulgences and automatically believing the news that matches your own worldview. Do you think Hillary is a criminal? When you see an article saying Hillary's going to jail due to the latest email leak, skip the editorial and go straight to the leaked email itself. Hint: Most of those emails were fairly innocuous.

When you see an image on Twitter of the KKK openly celebrating Trump's victory, confirm that the image is actually what it claims to be before reacting. Hint: That image neither showed KKK members nor was taken after the election.

Check sources. Think critically. Find fault in arguments you agree with. Use Snopes.com. Seek a variety of sources. Ignore question headlines. Ignore clickbait headlines. Every time you visit DailyKos, end it with a visit to Drudge. Better yet, avoid both of those sites.

Most importantly, be relentlessly suspicious.

It's not easy to be relentlessly suspicious of what you agree with, but this is the world we live in. Recommendation engines determine the social and cultural information that we see in our digital lives. If you're not relentlessly suspicious, you'll fall into the same echo chamber so much of the nation fell into during this last election.

Yes, that echo chamber is comfortable, but it's a false comfort.

Awesome explanation Matt

Makes sense. Good article, Matt. Should I have clicked on it, though?